What Students Need in the AI Age

Part 2 of my 4-part series: Teaching and Learning in the AI Age - Outlining Challenges and Opportunities

In part one of this series on Teaching and Learning in the AI Age, I outlined what TEACHERS NEED to KNOW, DO, and KNOW HOW to do. The feedback from my recent workshops confirms that teachers are becoming increasingly aware of the reality: yes, teachers need training, guidelines, and time to experiment in order to attain AI competence. They also need some things I hadn't mentioned, such as tool licenses, stable and functional technical infrastructure, and supportive leadership.

Interestingly, not enough teachers have actually spent class time talking ABOUT generative AI or laying down ground rules for its use in class or in assignments, and even fewer are creating lessons where students are challenged to use AI safely and ethically. Not enough educators are asking: 'What about the students? What do THEY need?'

Even while educators grapple with their own AI learning curve, our students need guidance now more than ever. They need educators' expertise and competence. They're already using AI tools, often more intuitively than we do, but technical facility doesn't equal wisdom.

How do we prepare them for an AI-augmented world? What skills do they really need? And most importantly, how do we ensure they don't just become efficient AI users, but ethical, critical thinkers in this new landscape?

Let's explore what students need - not just to survive, but to thrive in the AI age.

We’ll explore:

What students need from us teachers:

- to know HOW to use AI safely

- to know HOW to use AI responsibly

- to know HOW to use AI ethically

and, beyond that:

- they need rules and expectations

- they need to be motivated to learn

- they need opportunities to practice

Let’s dive in.

Part 2: What students need

As with teachers, there are several things students need to know, to do, and to know HOW to do.

Need to Know »

Just like teachers, students need to gain AI literacy. This includes understanding how generative AI works, how these models are trained on data, why AI can produce inaccurate information (hallucinations) and biased results, and what standard multimodal functionalities exist. They need to explore alternatives to ChatGPT, understanding the similarities, differences, and limitations of each tool.

Information literacy must include a clear understanding of data privacy. Students should learn how companies use data from these tools, recognize the risks of uploading personal or sensitive information, and understand that anything they share may become part of these companies' data ecosystems, potentially viewable and usable by others.

The ecological impact of AI must be part of these discussions. Students need to understand that training and running these models requires massive amounts of energy and computing power. This awareness can lead to more mindful and purposeful use of AI tools.

There are many educators who haven't yet had 'the talk' with their students about AI, and some colleagues have disagreed with my approach of holding open conversations about these realities, preferring not to talk about it at all. I'm fortunate that in Austria, information, digital, and AI literacy are part of the secondary school curriculum through the subject I teach, 'Digitale Grundbildung' (Foundational Media Training). This allows me to dedicate time to discussing generative AI and having students experiment with various tools. With parental consent, students can access AI tools using their school accounts or use AI within virtual rooms that teachers set up on platforms such as magicschool.ai or fobizz.com.

I respond to criticism with simple facts: AI literacy is part of the curriculum and part of students' reality. These tools are freely available on their mobile phones (ChatGPT is now even accessible via WhatsApp), and teens are already getting advice, often about unethical use, from peers and social media platforms like TikTok. Not addressing these realities openly would put us at a disadvantage. Moreover, open discussion sends a clear message to students: we understand these tools and their implications.

Need to Hear and Understand »

What do students need to hear from us? First and foremost, they need to understand what generative AI tools truly are: digital aids for certain processes, not a replacement for critical thinking or work where personal effort is the point (as Ethan Mollick so succinctly puts it).

Students need to understand how learning works, and why pressing the 'AI button' isn't always the answer. We may never have had to talk to students about how learning works before, but the age of AI makes this conversation essential. Real learning often requires productive struggle: those challenging moments of working through confusion that lead to deeper understanding. This struggle isn't just busywork; it's the vital process that builds lasting understanding. While AI can be a valuable tool, reaching for an AI solution immediately bypasses this mental effort and can prevent students from developing genuine comprehension and critical thinking skills. Once students grasp this, they're better equipped to make smart choices about when to use AI and when to embrace the challenge of figuring things out themselves.

Equally important is understanding that AI is fundamentally software and algorithms: strings of code designed to pattern-match and predict responses. While chatbots may seem friendly and understanding, they're not sentient beings capable of true empathy or friendship. This distinction is crucial, especially as we see concerning cases of teenagers developing emotional attachments to AI chatbots, mistaking sophisticated pattern recognition for genuine human connection. We need to help students understand that while AI can be a useful tool, it can never replace the depth, authenticity, and growth that come from real human relationships and interactions.

Lastly, and perhaps most importantly, students need to understand that their authentic voice matters. While AI can generate polished prose and seemingly perfect essays, what we teachers truly value are students' unique thoughts, ideas, and expressions of their beliefs. Each person has a distinct perspective and way of communicating that no algorithm can replicate. We're not seeking perfection - we're seeking authenticity. Students need to hear this clearly: letting AI replace their voice means losing something precious - their individual way of seeing and expressing their understanding of the world. The occasional awkward phrase or imperfect paragraph that shows genuine thinking is far more valuable than flawless, AI-generated content that lacks personal insight. Only through the act of writing can students become competent enough to know what is GOOD writing.

Need to Learn and Practise »

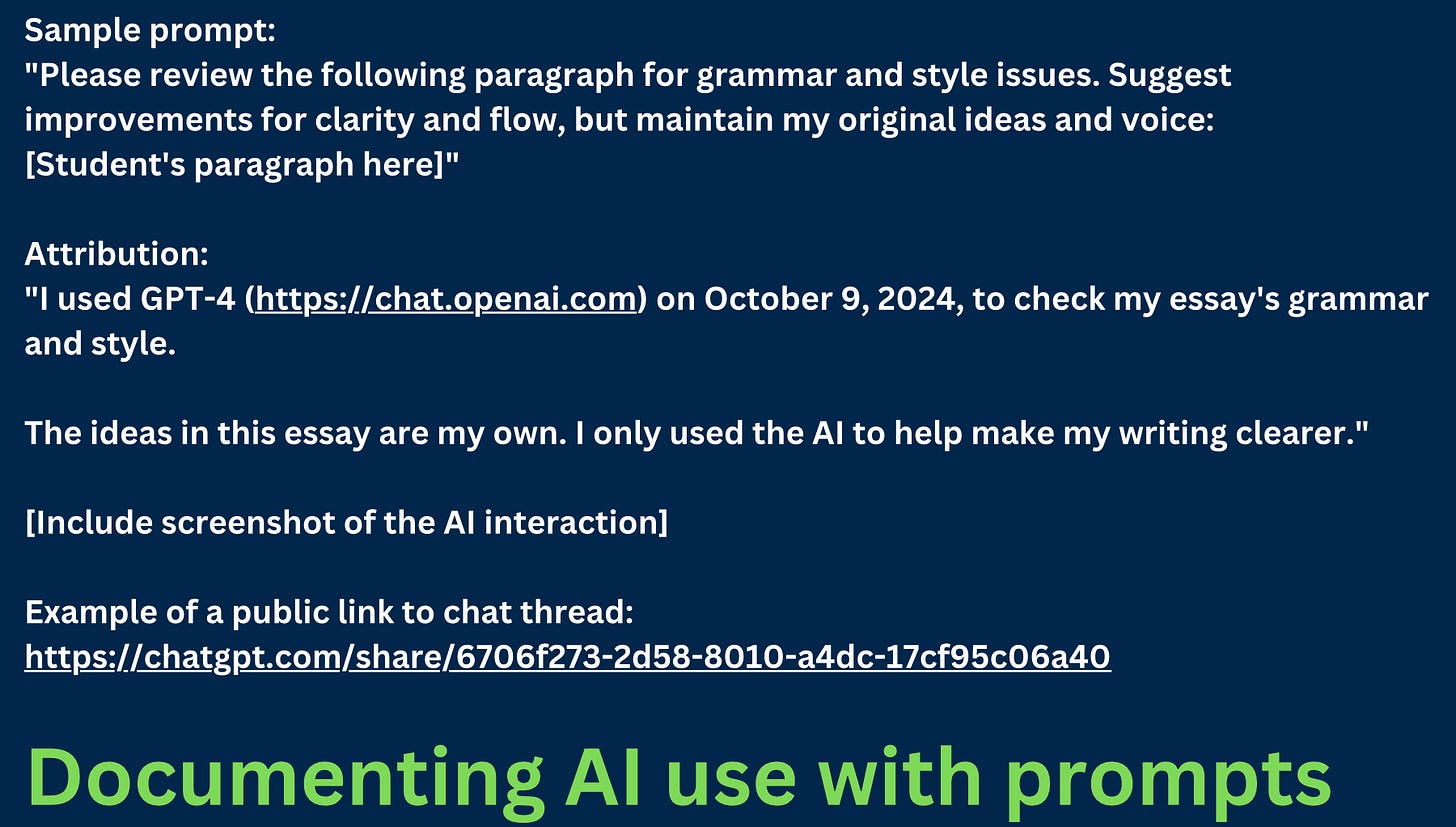

Students need to master both effective prompting and critical verification skills. While AI tools like ChatGPT are increasingly being used in place of traditional search engines, students must understand that getting good results isn't just about crafting the right prompt; it's about knowing how to verify and evaluate the information they receive. Fortunately, with integrated web search capabilities, fact-checking AI-generated content is becoming easier. Yet the fundamentals remain crucial: students must learn to identify credible sources, cross-verify information, and evaluate search results critically. AI should make fact-checking more efficient, not less thorough.

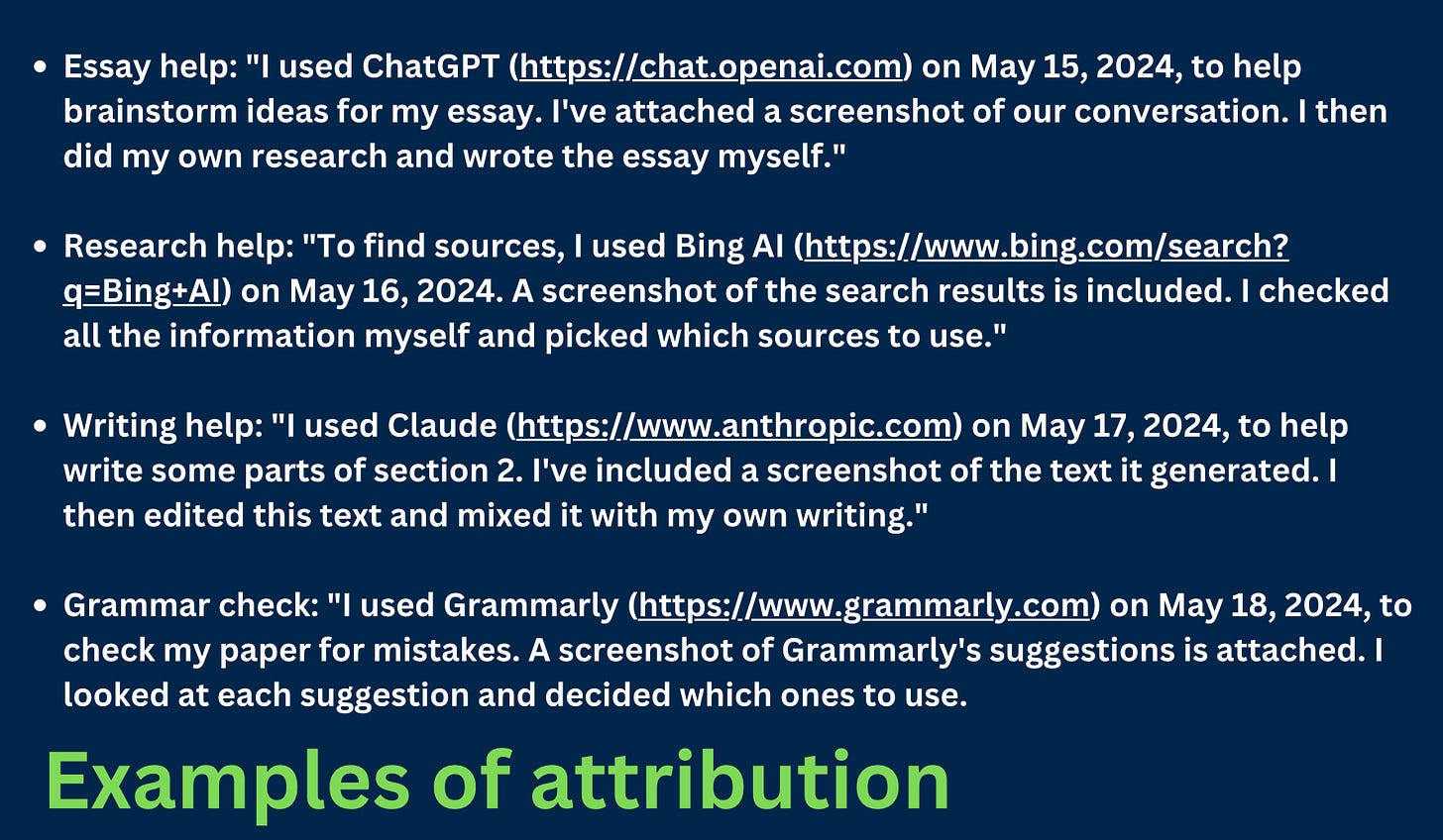

This goes hand in hand with the need to know how to document and cite AI use properly. Many institutions worldwide have developed clear guidelines for AI attribution, ranging from simple 'dos and don'ts' lists to comprehensive documentation frameworks. These guidelines typically outline when and how AI can be used, and most importantly, how to cite it transparently in academic work. For example, Christian Spannagel's detailed framework for university students provides an excellent model: it specifies how students should document which AI tool they used, what inputs they provided, and how they incorporated the AI-generated content into their work. Students need to understand that using AI isn't prohibited - but using it without proper attribution is.

It helps to give students specifics, including within individual assignments, about which uses of AI we accept and why. Listing concrete use cases also makes our expectations clear. For example, AI may be used to:

- generate initial ideas

- create outlines and structures

- find and summarize sources

- get explanations of complex topics

- improve phrasing

- check grammar and spelling

- get suggestions for clearer writing

- receive feedback on their work

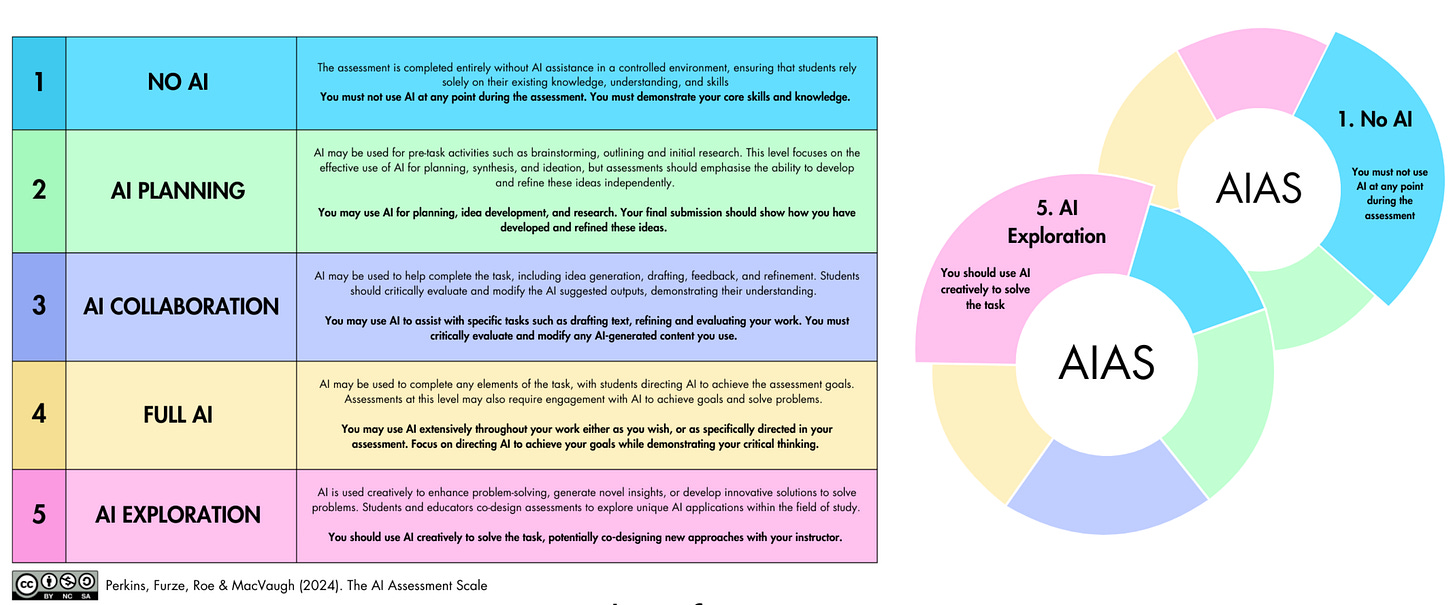

Leon Furze's AI Assessment Scale helps teachers and students understand different levels of AI use in academic work, ranging from basic assistance to more complex applications. The scale:

- Distinguishes between different levels of AI use:
    - Basic (like grammar checking)
    - Intermediate (like brainstorming)
    - Advanced (like analysis and synthesis)

- Helps define appropriate use:
    - What's acceptable in different contexts
    - How to properly attribute AI assistance
    - When AI use might be inappropriate

- Provides clear guidance for:
    - Teachers setting assignments
    - Students using AI tools
    - Assessment criteria

I provide my students with concrete examples of AI attribution (see screenshots) and guide them through sample activities that demonstrate proper documentation. Seeing real examples of how to cite AI use - with specific tools, dates, and purposes - helps make these requirements clear and actionable.

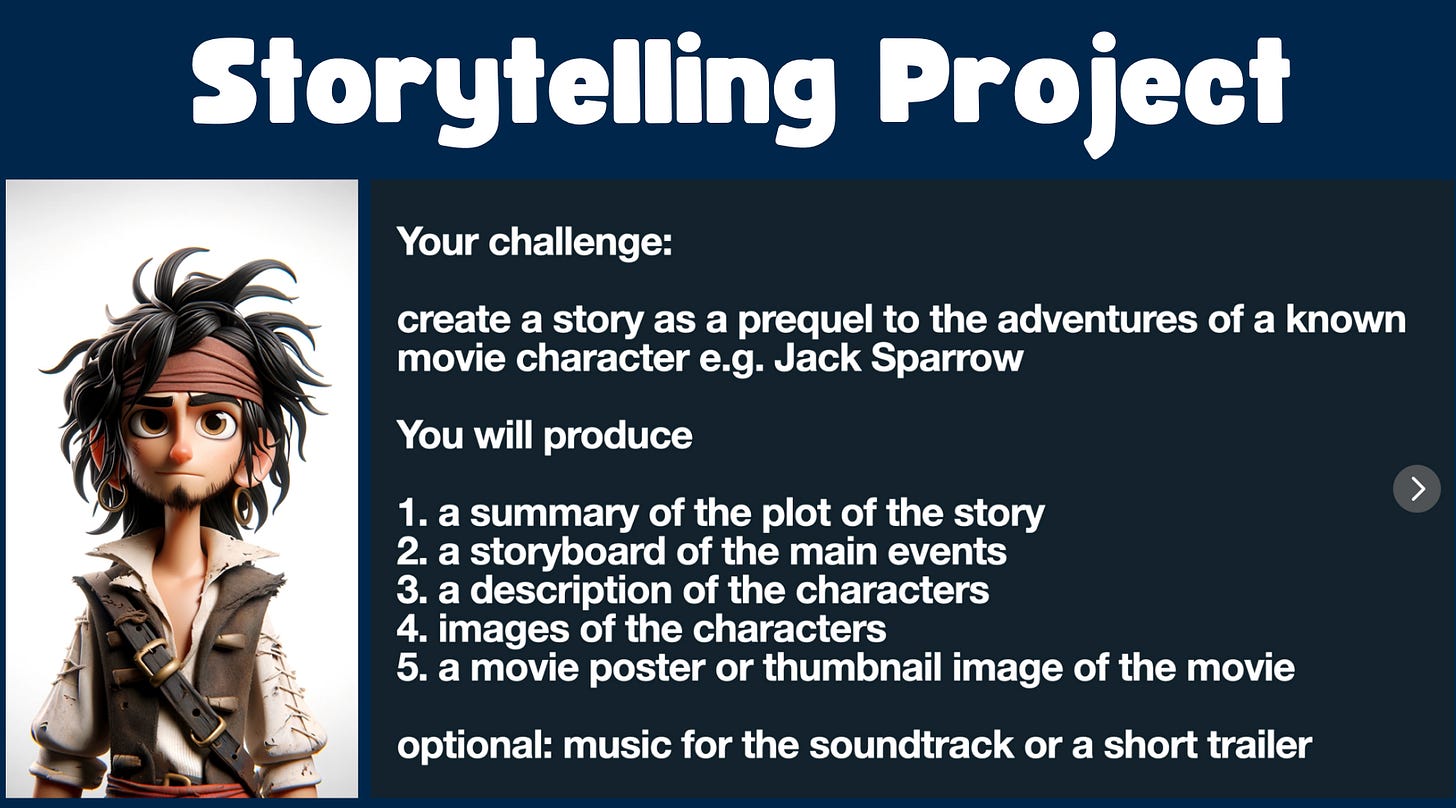

Need Opportunities to Practice »

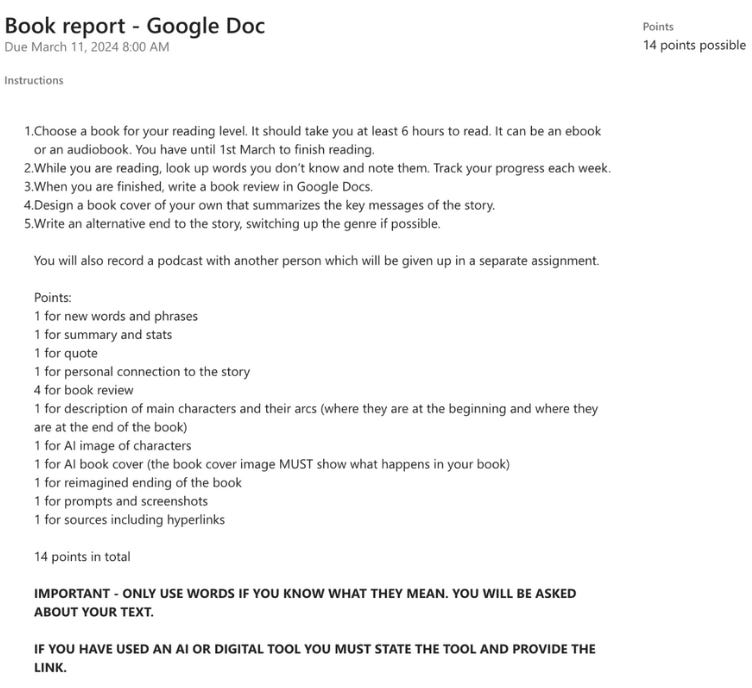

In my view, it remains essential to design lessons and activities in which AI use is part and parcel of the learning, so that we can properly supervise and accompany students as they learn to use AI. For language learners, this might mean creating a podcast where students use AI at multiple stages: drafting the initial story, creating a script, and designing podcast artwork. For literature classes, students can enhance their book reports by generating character images and book covers, then creating discussion podcasts with peers (see screenshot). In creative writing, students explore character arcs using AI to complement their storytelling process. These structured activities not only teach subject content but also guide students in discovering both the potential and the limitations of AI tools while developing critical thinking about when and how to use them effectively.

In each case, students were required to attribute their AI tool use with dates, links, prompts, and screenshots. This consistent practice of documentation not only builds good habits but also helps students understand that AI is a tool to enhance, not replace, their own creative and academic work.

Our Students Need US

As we guide our students through this AI-transformed educational landscape, three fundamental needs emerge again and again: the need to use AI safely, the need to use AI ethically, and the need to use AI responsibly. Safe use means understanding privacy implications and protecting personal data. Ethical use means maintaining academic integrity through proper attribution and preserving one's authentic voice. Responsible use means knowing when AI can enhance learning and when it might hinder genuine understanding.

Our role as educators is to provide the framework for these three essential aspects. Through clear guidelines, structured practice, and open discussions, we can help students develop the discernment they need for safe AI use, the integrity they need for ethical AI use, and the wisdom they need for responsible AI use.

In the next part of this series, we'll explore HOW teachers can effectively demonstrate and teach these principles in their daily practice. Until then, I encourage you to start these important conversations with your students. How are they approaching AI use? What guidance do they need to use it more safely, ethically, and responsibly? Share your experiences in the comments below - let's learn from each other as we navigate this transformation together.

Thanks for reading!

Title image by Canva